Lemmatization with NLTK relies on the WordNetLemmatizer class, which reduces inflected words to their dictionary form. In NLP and Machine Learning pipelines this cuts redundancy in the vocabulary and can improve accuracy. Correct Part of Speech (POS) tags are crucial: the lemmatizer treats every word as a noun unless told otherwise, so a missing or inaccurate tag can produce the wrong lemma. Using nltk.pos_tag together with nltk.word_tokenize supplies those tags. The choice between lemmatization and stemming depends on the requirements of the task at hand, and sound word tokenization is a prerequisite for either. The sections below cover these points, along with common challenges and optimization techniques.

Key Takeaways

- Begin by importing the nltk library and downloading wordnet, a lexical database of the English language.

- Instantiate a lemmatizer with the WordNetLemmatizer class from nltk.stem.

- Apply the nltk.word_tokenize function to break down the text into individual words.

- If needed, use nltk.pos_tag to assign part-of-speech tags to the words for better lemmatization results.

- Apply the lemmatizer to each token using its lemmatize() method, passing the POS tag when one is available.
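The steps above can be sketched as follows. This is a minimal sketch under stated assumptions, not a definitive setup: `RESOURCES` and `lemmatize_text` are illustrative names, and which data packages a given NLTK release needs is an assumption (the tokenizer data is named `punkt` in older releases and `punkt_tab` in newer ones). No POS tags are passed here, so every token is treated as a noun.

```python
# Data packages to fetch. The exact set required depends on the NLTK release,
# so this list is an assumption; extra packages are harmless.
RESOURCES = ("wordnet", "omw-1.4", "punkt", "punkt_tab")


def lemmatize_text(text):
    """Tokenize text and lemmatize each token as a noun (the default POS)."""
    # Imported here so the module still loads where NLTK is not installed.
    import nltk
    from nltk.stem import WordNetLemmatizer

    for name in RESOURCES:
        nltk.download(name, quiet=True)  # skips packages already up to date

    lemmatizer = WordNetLemmatizer()
    tokens = nltk.word_tokenize(text)
    return [lemmatizer.lemmatize(token) for token in tokens]
```

Calling `lemmatize_text("The cats chased the mice")` should reduce plural nouns such as "cats" to "cat", while a verb form such as "chased" stays unchanged because no POS tag is supplied.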

Understanding NLTK Lemmatization Basics

NLTK lemmatization centers on the WordNetLemmatizer class. Lemmatization reduces inflected words to their base dictionary form (the lemma), which improves the efficiency of text processing in Natural Language Processing (NLP) and Machine Learning applications. WordNetLemmatizer looks each word up in the WordNet database. It does not inspect the surrounding sentence, and its lemmatize() method treats a word as a noun unless a part of speech is supplied. The benefits of lemmatization include less redundancy in the data, since variants such as "cats" and "cat" collapse into one entry, and more accurate information retrieval.

Role of Part of Speech Tagging in NLTK Lemmatization

Part of Speech (POS) tagging assigns a syntactic category (noun, verb, adjective, adverb) to each word, and that category tells the lemmatizer which base form to look for. This matters most for words whose lemma depends on usage: "leaves" is "leaf" as a noun but "leave" as a verb. Incorrect POS tags are harmful for the same reason: the lemmatizer searches the wrong category and returns the wrong base form, or returns the word unchanged. Accurate POS tagging is therefore crucial for successful lemmatization.
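To make the effect of the POS argument concrete, here is a small sketch. The helper `lemmas_by_pos` and the `demo` function are hypothetical names introduced for illustration; the only NLTK call relied on is `WordNetLemmatizer.lemmatize(word, pos=...)`, where `pos` is one of the WordNet letters `n`, `v`, `a`, `r`.

```python
def lemmas_by_pos(word, lemmatizer, tags=("n", "v", "a", "r")):
    """Return {pos: lemma} for one word under each WordNet POS letter."""
    return {pos: lemmatizer.lemmatize(word, pos=pos) for pos in tags}


def demo():
    # Local imports keep the helper above usable without NLTK installed.
    import nltk
    from nltk.stem import WordNetLemmatizer

    for name in ("wordnet", "omw-1.4"):
        nltk.download(name, quiet=True)
    lemmatizer = WordNetLemmatizer()

    print(lemmatizer.lemmatize("running"))           # noun by default: "running"
    print(lemmatizer.lemmatize("running", pos="v"))  # verb: "run"
    print(lemmatizer.lemmatize("better", pos="a"))   # adjective: "good"
    print(lemmas_by_pos("leaves", lemmatizer))       # one lemma per POS letter
```

Running `demo()` shows the same surface form producing different lemmas depending only on the tag that is passed in.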

Exploring NLTK Lemmatization Techniques

Having established the importance of accurate Part of Speech tagging, we now turn to the two techniques commonly used for NLTK lemmatization. The first calls WordNetLemmatizer directly on each word. It is simple and fast, but it treats every word as a noun unless a POS is passed. The second combines nltk.pos_tag with nltk.word_tokenize: the text is split into tokens, each token is tagged, and the tag is passed to the lemmatizer. Because nltk.pos_tag returns Penn Treebank tags (such as VBG or JJ) while the lemmatizer expects WordNet categories, the tags must be mapped from one scheme to the other. The second technique is more accurate because each word is lemmatized according to its role in the sentence.
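A minimal sketch of the second technique follows. The function names `penn_to_wordnet` and `lemmatize_with_pos` are illustrative, the prefix-based mapping is a common simplification rather than an official NLTK API, and the list of data packages is an assumption that depends on the installed NLTK release.

```python
def penn_to_wordnet(tag):
    """Map a Penn Treebank tag from nltk.pos_tag to a WordNet POS letter."""
    if tag.startswith("JJ"):
        return "a"  # adjective
    if tag.startswith("VB"):
        return "v"  # verb
    if tag.startswith("RB"):
        return "r"  # adverb
    return "n"      # nouns and all other tags fall back to the default


def lemmatize_with_pos(text):
    """Tokenize, tag, and lemmatize text using the tag of each token."""
    # Imported here so penn_to_wordnet stays usable without NLTK installed.
    import nltk
    from nltk.stem import WordNetLemmatizer

    # Package names vary by NLTK release; this list is an assumption.
    for name in ("wordnet", "omw-1.4", "punkt", "punkt_tab",
                 "averaged_perceptron_tagger", "averaged_perceptron_tagger_eng"):
        nltk.download(name, quiet=True)

    lemmatizer = WordNetLemmatizer()
    tagged = nltk.pos_tag(nltk.word_tokenize(text))
    return [lemmatizer.lemmatize(word, pos=penn_to_wordnet(tag))
            for word, tag in tagged]
```

Falling back to the noun category for unmapped tags mirrors the lemmatizer's own default, so function words and punctuation pass through unchanged.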

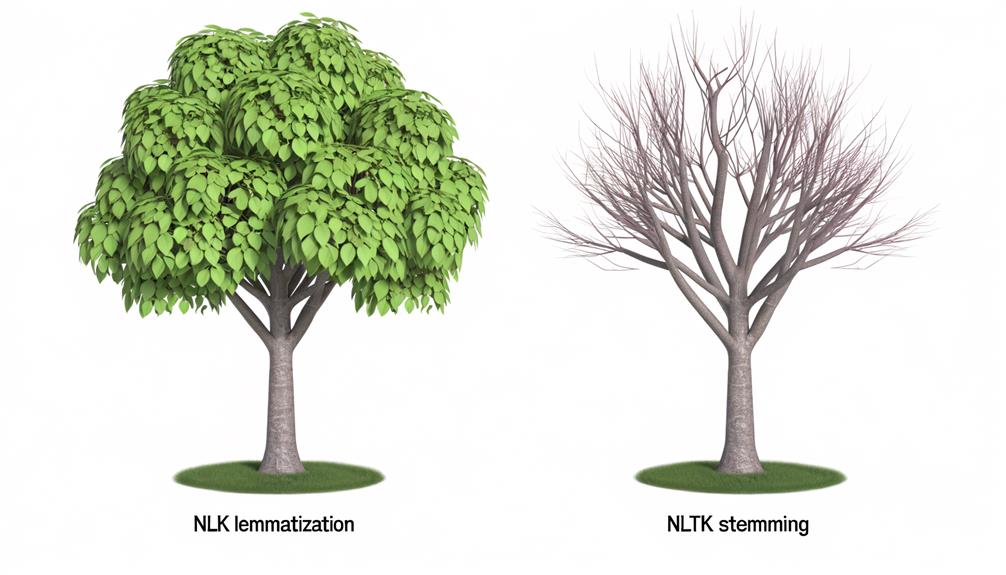

NLTK Lemmatization Vs. NLTK Stemming

NLTK lemmatization and NLTK stemming both reduce words to a base form, but they differ in approach and results. Stemming chops off affixes by rule, so its output is not always a real word. Lemmatization uses a dictionary together with the word's part of speech, so its output is a valid word that preserves the original meaning. Stemming is faster, which makes it suitable for large datasets where computational resources are a concern; lemmatization is preferred when accuracy matters more than speed. The choice between the two should be dictated by the specific requirements of the task at hand.
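The difference is easiest to see side by side. The sketch below assumes NLTK's `PorterStemmer` as the stemmer (the text does not name one) and uses a hypothetical `compare` helper that accepts any stem and lemmatize callables.

```python
def compare(words, stem, lemmatize):
    """Return (word, stem, lemma) rows for side-by-side inspection."""
    return [(word, stem(word), lemmatize(word)) for word in words]


def demo():
    # Local imports keep compare() usable without NLTK installed.
    import nltk
    from nltk.stem import PorterStemmer, WordNetLemmatizer

    for name in ("wordnet", "omw-1.4"):
        nltk.download(name, quiet=True)

    stemmer = PorterStemmer()
    lemmatizer = WordNetLemmatizer()
    rows = compare(["studies", "feet", "running"],
                   stemmer.stem, lemmatizer.lemmatize)
    for word, stemmed, lemma in rows:
        print(f"{word:10} stem={stemmed:10} lemma={lemma}")
    # "studies" stems to "studi", which is not a word, but lemmatizes to "study".
```

Note that the lemmatizer is called without a POS tag here, so "running" is treated as a noun and returned unchanged, while the stemmer reduces it to "run".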

Importance of NLTK Word Tokenization

Tokenization is a prerequisite for effective lemmatization. It divides text into individual words, or tokens, and each token is then lemmatized independently, so the quality of tokenization directly affects the accuracy of the resulting lemmas. If tokens are malformed, for example with punctuation still attached, the lemmatizer cannot match them against its dictionary and returns them unchanged. Tokenization is therefore an indispensable step in preparing text for lemmatization and other linguistic analysis.
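As a rough illustration of why the tokenizer matters, the sketch below compares plain whitespace splitting with `nltk.word_tokenize`. The `clean_tokens` helper is a hypothetical name, and filtering to alphabetic tokens is one possible cleanup choice, not something the lemmatizer requires.

```python
def clean_tokens(text, tokenize):
    """Lowercase the tokens and keep only purely alphabetic ones."""
    return [token.lower() for token in tokenize(text) if token.isalpha()]


def demo():
    # Local import keeps clean_tokens() usable without NLTK installed.
    import nltk

    for name in ("punkt", "punkt_tab"):  # name depends on the NLTK release
        nltk.download(name, quiet=True)

    sentence = "The cats sat."
    # Whitespace splitting leaves the period glued to "sat.", so it is dropped.
    print(clean_tokens(sentence, str.split))           # ['the', 'cats']
    # word_tokenize separates the period, so "sat" survives the filter.
    print(clean_tokens(sentence, nltk.word_tokenize))  # ['the', 'cats', 'sat']
```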

Practical Applications of NLTK Lemmatization

The practical applications of NLTK Lemmatization permeate various fields, demonstrating its importance in enhancing the accuracy and efficiency of natural language processing tasks.

- Information Retrieval Systems: With high lemmatization accuracy, keyword-based searches yield more relevant results, enhancing real-world applications like search engines.

- Text Summarization: Grouping word variants under a single lemma helps identify the key terms of a long text, which supports more accurate summaries.

- Machine Translation: NLTK Lemmatization helps in improving the nuances of translated content, preserving the contextual meaning.

- Sentiment Analysis: It plays a crucial role in accurately identifying and classifying the sentiments of text data.

Thus, NLTK Lemmatization proves to be a valuable asset across natural language processing tasks, particularly where accuracy matters more than raw speed.

Common Challenges in NLTK Lemmatization

Despite these advantages, NLTK lemmatization faces several challenges: part-of-speech ambiguity, incorrect lemma generation, and words that are missing from the WordNet database used by NLTK. Improving lemmatization accuracy and performance means addressing each of these issues.

| Challenge | Impact | Potential Solution |
|---|---|---|
| Part-of-speech Ambiguity | Incorrect lemma generation | Custom POS Tag Mapping |
| Absence of words in WordNet | Inability to find correct lemma | Extend WordNet Database |
| Incorrect Lemma Generation | Reduced lemmatization accuracy | Improve WordNetLemmatizer Algorithm |

These solutions can help in overcoming the common challenges in NLTK lemmatization, paving the way for better results.

Optimizing NLTK Lemmatization for Better Results

Optimization of NLTK's lemmatization process can significantly enhance its accuracy and efficiency, leading to more precise and meaningful linguistic results. To optimize lemmatization, we need to focus on:

- Contextual Analysis: This includes identifying the role of a word in a sentence, which helps in enhancing performance by providing the correct lemma.

- Part of Speech Tagging: By correctly identifying the part of speech, we can improve the lemmatization accuracy.

- Word Tokenization: Clean tokenization ensures the lemmatizer receives well-formed words, which avoids wasted lookups on malformed tokens.

- Customizing Lemmatization Functions: Depending on the text data, creating custom lemmatization functions can yield better results.
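One possible custom lemmatization function is a cached wrapper, since the same words recur many times in real text. This is a sketch of one optimization, not a technique the text prescribes: `make_cached_lemmatizer` is a hypothetical helper, and the cache size is an arbitrary assumption.

```python
from functools import lru_cache


def make_cached_lemmatizer(lemmatize, maxsize=100_000):
    """Wrap a lemmatize(word, pos) callable with an in-memory cache."""

    @lru_cache(maxsize=maxsize)
    def cached(word, pos="n"):
        # Only reached on a cache miss; repeated (word, pos) pairs are served
        # from the cache without calling the underlying lemmatizer.
        return lemmatize(word, pos)

    return cached
```

With NLTK installed, it could be wired up as `cached = make_cached_lemmatizer(WordNetLemmatizer().lemmatize)` and then called as `cached("cats", "n")`.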

Frequently Asked Questions

What Are the Prerequisites for Using NLTK for Lemmatization?

Three prerequisites apply. First, NLTK must be installed and the WordNet data downloaded, since the lemmatizer depends on it. Second, you should understand what lemmatization does: it reduces words to their base, or lemma, form. Third, familiarity with tokenization and part-of-speech tagging is necessary, as both play a significant role in effective lemmatization.

Does NLTK Lemmatization Support Languages Other Than English?

NLTK's WordNetLemmatizer is built on the English WordNet database, so its lemmatization is effectively limited to English text. Extending it to other languages is possible by incorporating additional resources or data sets, though this requires substantial effort. In essence, while NLTK is a powerful tool for English language processing, its support for lemmatization in other languages is limited.

Is There a Way to Improve Accuracy in NLTK Lemmatization?

To improve accuracy in NLTK lemmatization, address the main sources of error: out-of-vocabulary words and homonyms. Supplying part-of-speech tags to WordNetLemmatizer lets it choose the correct base form for each usage. For specialized text, enhancing the underlying dictionary and training custom models on domain-specific data can also help. Ultimately, the accuracy of lemmatization depends on the quality and appropriateness of the underlying linguistic resources.

How Do I Handle Unknown Words or Slang in NLTK Lemmatization?

Unknown words and slang are a challenge because WordNet does not contain them, so the lemmatizer returns them unchanged. For slang or Internet abbreviations, a custom dictionary that maps each term to its intended lemma can improve accuracy. It is essential to balance comprehensive coverage against computational efficiency, and the dictionary needs continuous updating and refinement to keep pace with evolving language.
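A custom dictionary of this kind can be sketched as a lookup that runs before the lemmatizer. The entries in `SLANG_LEMMAS` are hypothetical examples, and `lemmatize_with_overrides` is an illustrative name rather than an NLTK function.

```python
# Hypothetical entries for illustration; a real table would be built from
# the slang and abbreviations that actually occur in the target data.
SLANG_LEMMAS = {"thx": "thanks", "pls": "please", "u": "you"}


def lemmatize_with_overrides(token, lemmatize, overrides=SLANG_LEMMAS):
    """Check the custom table first, then fall back to the lemmatizer."""
    key = token.lower()
    if key in overrides:
        return overrides[key]
    return lemmatize(token)
```

With NLTK installed, `lemmatize` could be `WordNetLemmatizer().lemmatize`, so ordinary words still go through WordNet while listed slang terms are resolved by the table.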

Can NLTK Lemmatization Be Used With Other Text Processing Libraries?

Yes. NLTK lemmatization can be integrated with other text processing libraries, combining the strengths of each. For instance, NLTK's linguistic resources can be coupled with the machine learning capabilities of libraries like Scikit-learn, with the lemmatizer acting as a preprocessing step before feature extraction. Used together, they can yield more accurate and nuanced text analysis.
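One way such an integration might look is to pass a lemmatizing tokenizer to Scikit-learn's `CountVectorizer` through its `tokenizer` parameter. This is a sketch under stated assumptions: `LemmaTokenizer` and `build_vectorizer` are hypothetical names, both libraries are assumed to be installed, and the list of NLTK data packages depends on the release.

```python
class LemmaTokenizer:
    """Callable that tokenizes a document and lemmatizes every token."""

    def __init__(self, tokenize, lemmatize):
        self.tokenize = tokenize
        self.lemmatize = lemmatize

    def __call__(self, document):
        return [self.lemmatize(token) for token in self.tokenize(document)]


def build_vectorizer():
    """Create a CountVectorizer whose vocabulary is built from lemmas."""
    # Local imports keep LemmaTokenizer usable without either library.
    import nltk
    from nltk.stem import WordNetLemmatizer
    from sklearn.feature_extraction.text import CountVectorizer

    for name in ("wordnet", "omw-1.4", "punkt", "punkt_tab"):
        nltk.download(name, quiet=True)

    tokenizer = LemmaTokenizer(nltk.word_tokenize, WordNetLemmatizer().lemmatize)
    return CountVectorizer(tokenizer=tokenizer)
```

Fitting the result on documents such as "The cats sat" and "A cat sits" should give "cats" and "cat" a single shared vocabulary entry, which is the redundancy reduction lemmatization is meant to provide.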